The digital world keeps changing almost daily, with new tools appearing all the time. One area that gets a lot of attention is artificial intelligence, especially as applied to images. People are discovering what AI can do with pictures, and the results are striking: some tools can alter a photo in ways that seemed impossible only a short time ago. This technology raises serious questions about what is right and what might be wrong.

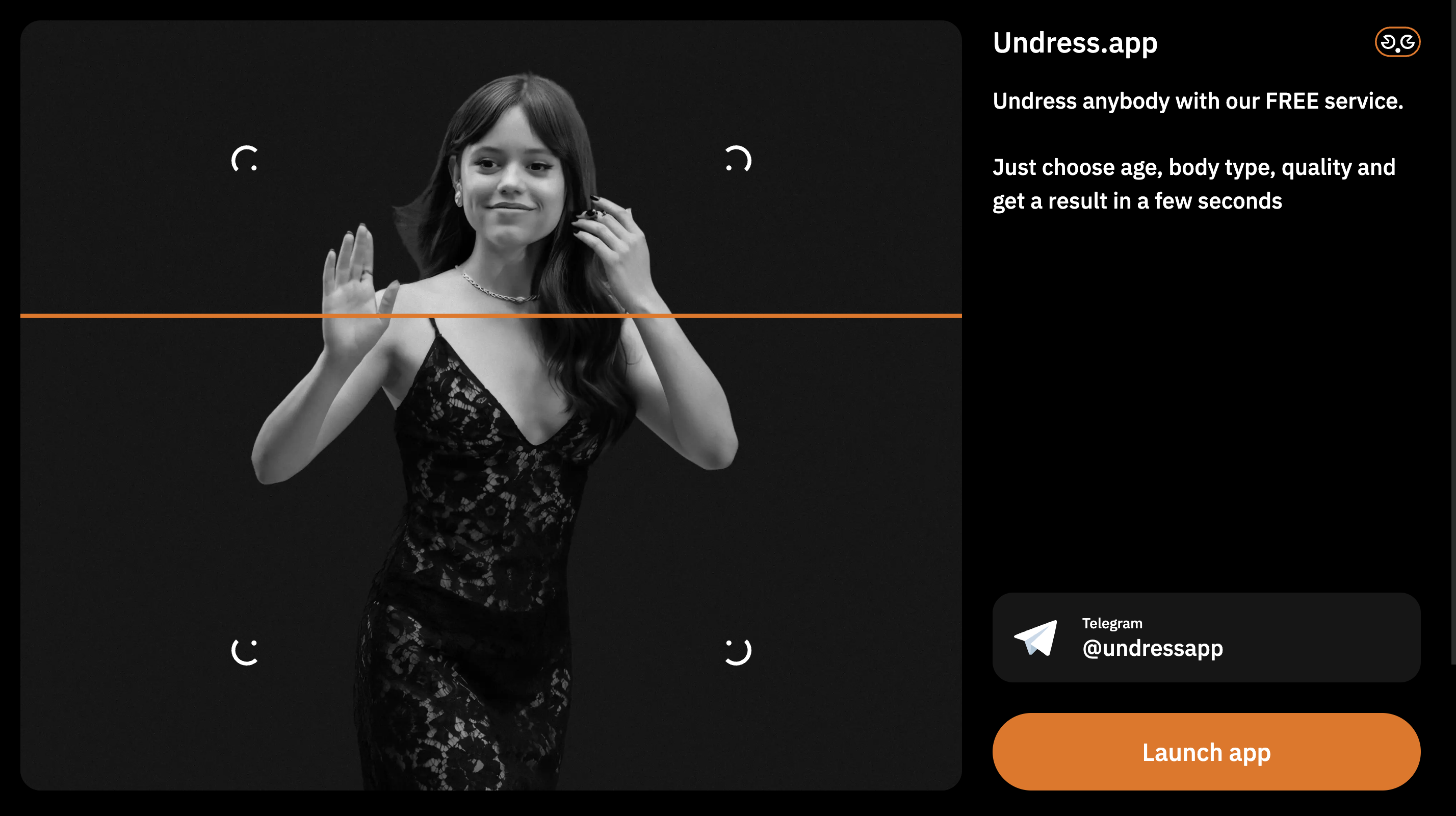

As we look ahead to 2025, the idea of an "undress AI free remover" is something that really gets people talking. It refers to AI that can alter clothing in pictures, or remove it entirely. Some online services advertise tools that claim to "undress any photo, remove or change clothes using our online tool," adding that "no photo editing skills required!" Others, such as "Virbo AI clothes remover" and "Unclothy," are described as AI tools "designed to undress photos" that "automatically detect and remove clothing." This technology already exists, and it appears to be improving.

This topic matters because it touches on big ideas like privacy, consent, and what we consider real online. It makes us think about the kind of digital space we want to build. So let's take a closer look at what this technology might mean for us, and why everyone should be aware of it as we move forward.

Table of Contents

- What 'Undress AI' Implies

- The Ethical Minefield: Consent and Privacy

- The Dangers of Misuse: Deepfakes and Harm

- Impact on Individuals and Society

- The Role of Platforms and Legislation in 2025

- Protecting Yourself in a Digital World

- The Path Forward: Responsible AI Development

- Frequently Asked Questions

What 'Undress AI' Implies

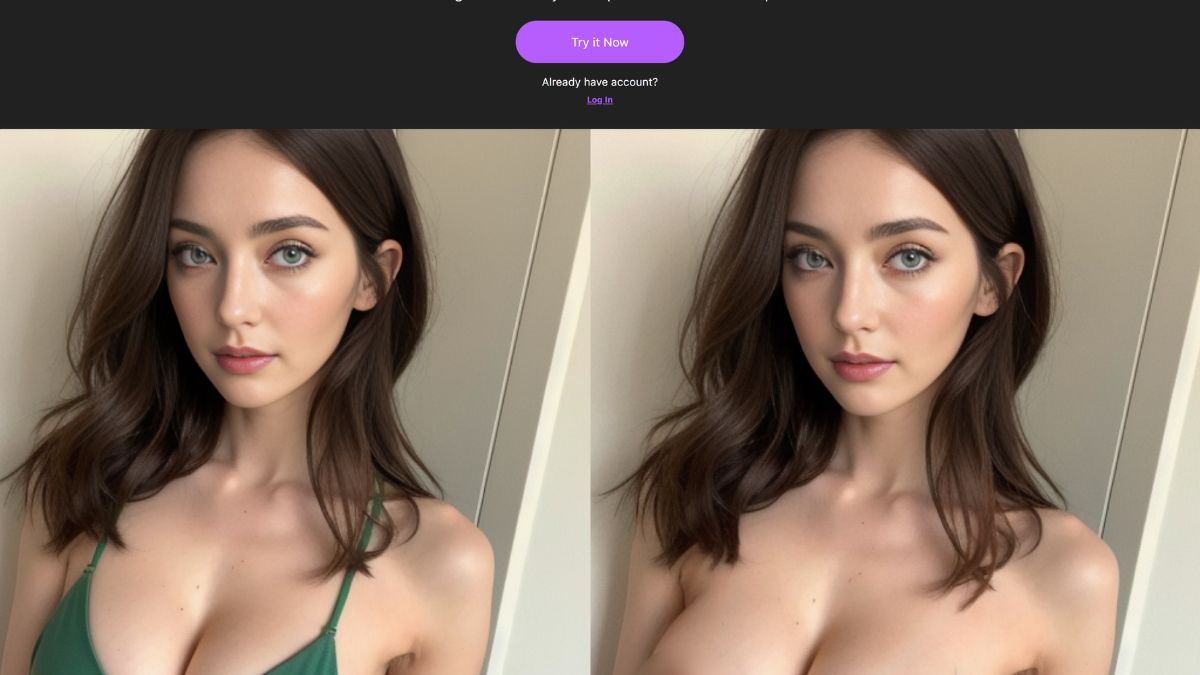

When people talk about an "undress AI free remover 2025," they mean a type of artificial intelligence that can change how clothes appear in a picture, or make them disappear. Such tools are described as able to "digitally transform images by removing clothing." They are marketed as a "photo editor for removing clothes on photos," and one even claims its "undress AI remover creates trendy video effects by digitally removing outer clothing like jackets." These tools rely on computer models trained on very large numbers of pictures. The model guesses what a person's body might look like under their clothes and then generates new pixels to match that guess, so the result is a fabrication, not a real view of the person. It works a bit like a very fast digital artist, except that no human guides each stroke; the program follows only the patterns it learned in training. Because this kind of AI is becoming more common, we need to talk about it.

The word "free" suggests that these tools could be easy for anyone to get and use at no cost, which makes the discussion even more important. If something like this is widely available, far more people can use it. Some might treat it as harmless fun; others will use it in ways that hurt people. That is where the serious concerns begin. The problem is not just the technology itself but what happens once it is in nearly everyone's hands.

So, while the technology is technically impressive, the real question is how it gets used, both by the people who make these tools and by the people who use them. The focus here is not on how to use such a tool but on understanding that it exists and what that implies. The topic deserves careful thought from everyone involved in the digital space.

The Ethical Minefield: Consent and Privacy

The biggest issue with any "undress AI" tool is consent. When a picture is taken, the person in it usually agrees to being photographed and, often, to how the picture will be used. With AI tools that alter clothing, the person may never know their image has been changed, and they certainly did not give permission for their clothes to be altered or removed. This is a very serious matter. It takes away a person's control over their own image and how others see them, and controlling how your own body is shown to the world is a basic right. It's a deeply personal thing.

Privacy is another major concern. Our clothes are part of our privacy: they cover our bodies and keep us from being seen in ways we do not want. An AI that removes clothes from a picture takes that privacy away, and it can do so without the person ever knowing. Imagine an innocent photo of you being altered by someone else. It would feel like a serious invasion, as if someone were looking at you in a way you never agreed to. That is why these tools are so troubling: they break down the personal boundaries we all expect to have.

The "free" label makes this privacy problem even worse. If these tools cost nothing and are easy to get, more people will use them, and more pictures will be altered without permission. Anyone's image could be at risk, which is a major worry for digital safety. We need to think about how to protect ourselves and others from this kind of misuse, and it deserves serious attention as we move into the future.

The Dangers of Misuse: Deepfakes and Harm

The misuse of "undress AI" tools produces what are known as deepfakes: pictures or videos created by AI that look real but are fake. When these tools are used to remove clothes from someone's picture, the result is a particularly harmful kind of deepfake, often classed as non-consensual intimate imagery (NCII), meaning intimate images of a person made or shared without their permission. This is a very serious problem.

The harm from such deepfakes can be severe. People targeted with NCII can feel deeply hurt, humiliated, and even unsafe. Their reputations can be ruined, their relationships can suffer, and the emotional toll can be heavy. These fake images can spread very quickly online, and once they are out, they are very hard to remove. Because the internet rarely forgets, the damage can last a long time. This is not a harmless prank; it is a real attack on a person's life.

Beyond the harm to individuals, the spread of deepfakes like these makes it harder for us to trust what we see online. If we can't tell what's real from what's fake, everything becomes confusing. That affects how we get our news, how we view public figures, and even how we interact with each other. It erodes trust in the digital world, so these tools are not just a problem for the people they target; they are a problem for everyone.

Impact on Individuals and Society

For individuals, having their image altered without consent can be devastating. Victims may face bullying, harassment, and even threats, and they may feel isolated and alone. The sense of having their personal space violated can be intense, and it can harm their mental health, contributing to anxiety or depression. Some even feel they have to leave social media or change their lives to escape the harm. This is a very real personal struggle.

At the level of society, widely available "undress AI free remover 2025" tools could change how we think about privacy in general. People may become afraid to share any pictures online, even innocent ones, which would make online communities less open and less enjoyable. These tools could also make it harder for law enforcement to distinguish genuine evidence from fabricated images, a significant challenge for the justice system.

There is also a risk that these tools will be used to target specific groups of people. Women, for example, are often the main targets of NCII, and this technology could make it easier to create and spread harmful content about them. That would add to existing problems of online abuse and make the internet less safe for many people. So the stakes go beyond any single picture: they concern whether the digital world becomes a better or a worse place.

The Role of Platforms and Legislation in 2025

Social media platforms and other online services have a very important role to play here, since they are where most of these images are shared. They need strong rules against deepfakes and NCII, along with ways to find and take down such content quickly. This is not an easy job, but it is necessary. Messaging services such as WhatsApp Web make it easy to share messages and files, which shows how quickly this kind of content can spread. Platforms need to invest in both technology and people to fight this misuse; their responsibility is considerable.

Looking to 2025, laws and rules about AI are likely to become even more important. Governments around the world are already debating how to regulate AI, and new laws may make it illegal to create or share deepfakes without consent; some places already have such laws. These laws give victims a way to fight back and aim to stop people from making this kind of harmful content in the first place. It's a complex area, but a very important one for keeping people safe.

It's also about holding the companies that create AI tools responsible. They should anticipate how their tools might be misused, build safeguards into their technology, and work with governments and experts to make sure AI is developed in a way that helps people rather than harms them. This is a shared job for everyone involved in AI: the question is not only what the technology can do, but what it *should* do.

Protecting Yourself in a Digital World

Given that tools like "undress AI free remover 2025" exist, protecting yourself online matters more than ever. One simple step is to be careful about which pictures you share publicly. Think twice before posting a photo that could be misused; even a harmless-looking picture can be copied and altered. It's a good habit to keep in mind, and a little caution goes a long way.

Another way to protect yourself is to understand how deepfakes work. Knowing that AI can create very realistic fake images helps you look more critically at what you see online. If something looks too good to be true, or just feels off, it might be a deepfake. Don't automatically believe everything you see, especially if it seems shocking or unbelievable.

If you ever find yourself a victim of deepfakes or NCII, remember that you are not alone. Organizations and resources exist to help. You can report the content to the platform where it's shared, and you can also contact law enforcement. Getting support is very important, and learning more about digital safety and online protection can give you practical next steps. Don't be afraid to ask for help.

The Path Forward: Responsible AI Development

The existence of tools like "undress AI free remover 2025" highlights a bigger point: the need for responsible AI development. As AI becomes more powerful, the people who create it carry a greater responsibility. They need to consider the ethics of their work and all the ways their technology could be used, both good and bad. It's not enough for something to work; it also has to be safe and fair.

This means building AI with ethics in mind from the very beginning, setting clear rules and guidelines for how it should be used, and educating people so everyone understands both its power and its risks. We need more discussion of AI ethics, not less, and more research into how to keep AI from being misused. This is a shared effort that involves everyone from tech companies to everyday users.

The future of AI can be very exciting and beneficial; AI can help solve big problems and make our lives better. But to reach that future, we have to deal with the challenges that come with powerful technology and make sure AI is used to build a safer, more respectful digital world for everyone. That means making choices that put people's well-being and consent first. It's a big task, but a necessary one.

Frequently Asked Questions

Is 'undress AI' legal?

The legality of 'undress AI' tools, especially those used to create non-consensual intimate imagery, is a complex and evolving area. Many countries are passing laws that make it illegal to create or share such content without the depicted person's permission, with the aim of protecting individuals from digital harm. However, laws vary from place to place, and keeping up with fast-changing technology is a constant struggle for lawmakers. So while the tools may exist, using them for harmful purposes is increasingly against the law in many places.

How can I tell if an image has been altered by AI?

It can be very hard to tell whether an image has been altered by AI, especially as the technology improves, because AI-generated images can look very real. Sometimes there are small clues, such as strange details in the background, inconsistent lighting, or unnatural-looking body parts. Tools to help detect AI-generated content are being developed, but they are not perfect. The best approach is a healthy dose of skepticism: if something looks suspicious or too perfect, it's worth questioning.

What should I do if I find a deepfake of myself or someone I know?

If you find a deepfake of yourself or someone you know, act quickly and carefully. First, report the content to the platform where you found it; most social media sites have ways to report harmful content. Consider preserving evidence, such as screenshots, but avoid sharing the harmful image further. You may also want to contact law enforcement, since creating and sharing such content can be a crime. Support organizations can also help victims of online abuse. Remember, you don't have to deal with this alone.
