The conversation around digital content creation and artificial intelligence is picking up speed. Tools like 'undress AI mod IPA' have become part of a bigger discussion about what AI can do with images and videos. The topic raises questions about creativity, privacy, and, most importantly, responsible use. Technology is quickly changing what is possible, including how we make and view pictures online.

Some of these tools get a lot of attention, sometimes for reasons that give us pause. They typically use machine learning models to alter or generate images, sometimes with quite realistic results. That capability shows how far AI has come, and it also highlights the need for a careful look at the implications for everyone online, especially the ethical ones.

This article aims to shed some light on what 'undress AI mod IPA' represents within the wider field of AI-generated content. We discuss the capabilities of such tools, the questions they raise, and how to approach digital creation with a strong sense of responsibility. The goal is a clear picture of the landscape and of the conversations happening right now, which matter for our digital future.

Table of Contents

- What is 'Undress AI Mod IPA'?

- The Appeal and the Concerns

- Ethical Implications of AI Image Generation

- Digital Privacy in the AI Era

- Responsible AI Use and Digital Citizenship

- Positive Applications of AI in Content Creation

- The Future Outlook for AI and Digital Content

- Frequently Asked Questions

- Conclusion

What is 'Undress AI Mod IPA'?

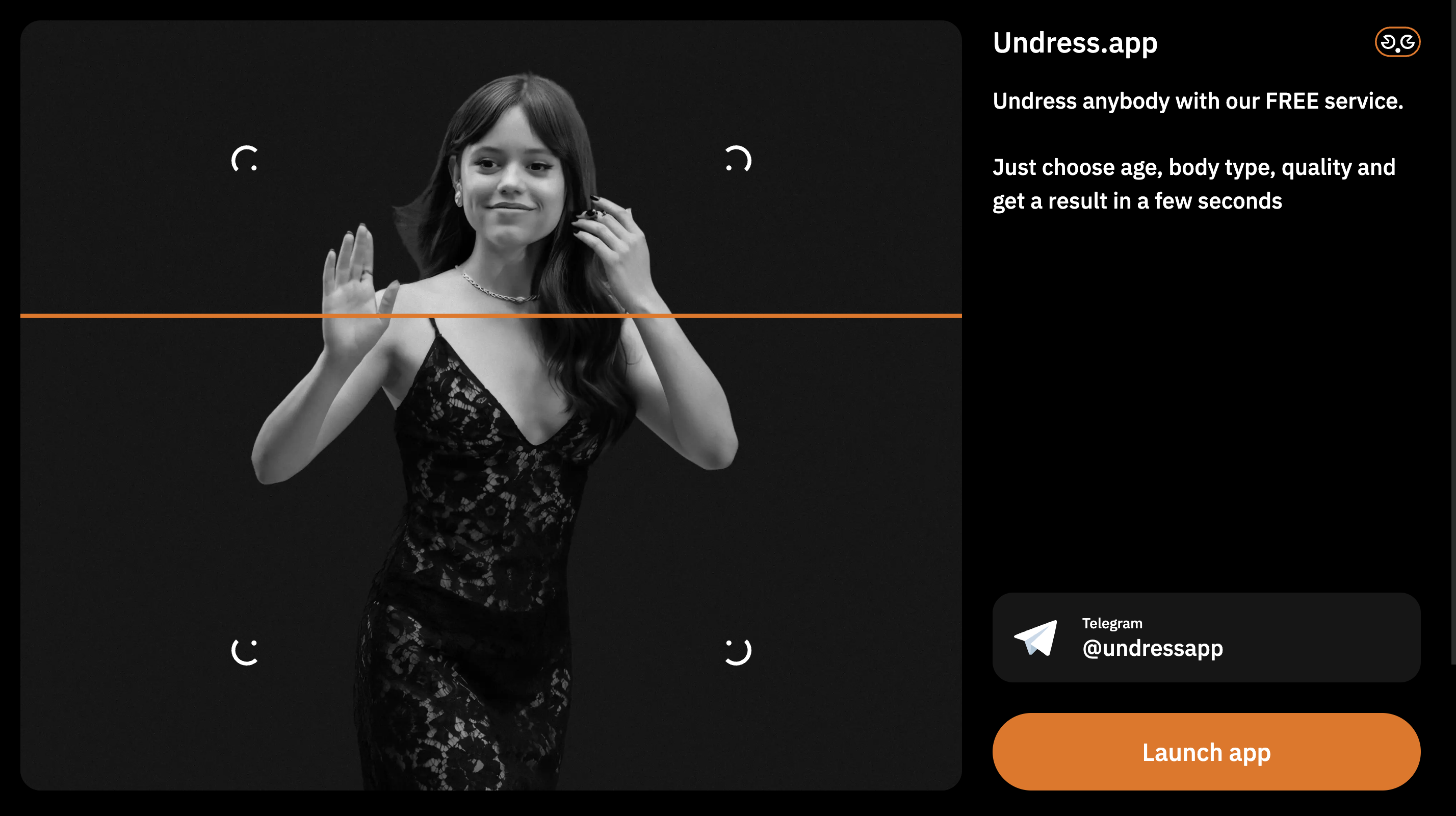

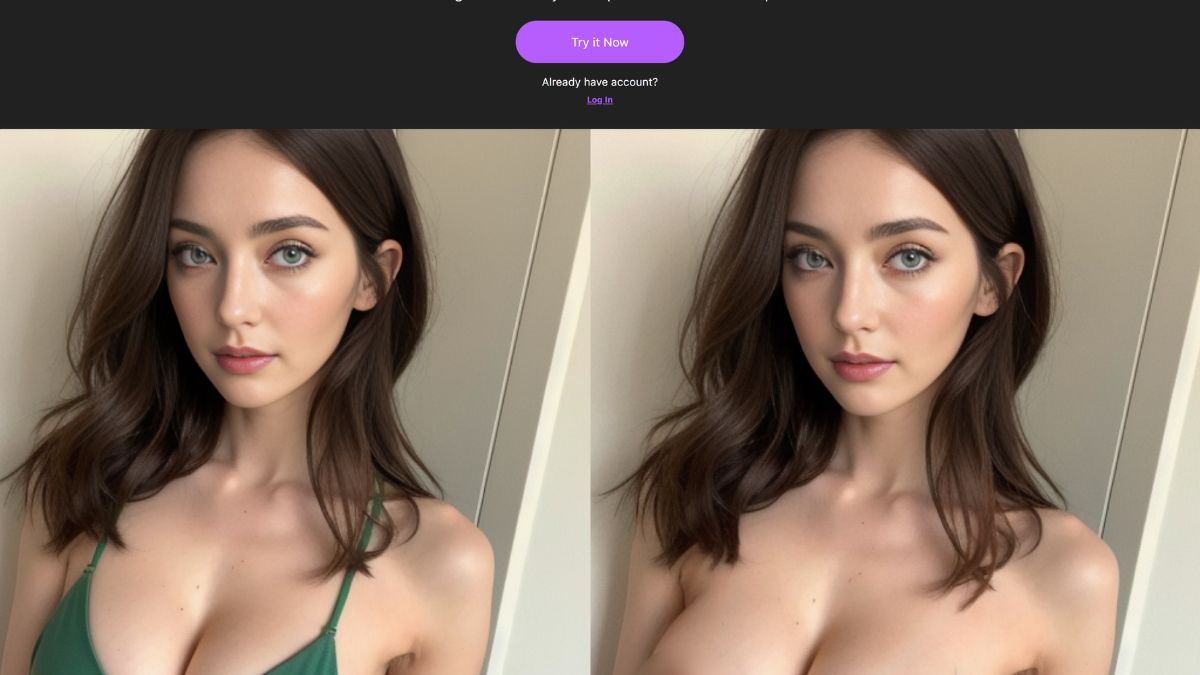

When people talk about 'undress AI mod IPA,' they are generally referring to a type of artificial intelligence application that manipulates or creates digital images. Such a tool uses machine learning algorithms to change how a picture looks, in this case by removing or altering clothing on a person in an image. The capability has sparked a lot of discussion across the internet, particularly about what is and is not acceptable in digital content.

These applications often rely on deep learning techniques. Their models have been trained on large amounts of image data to learn patterns and features. This training lets them predict and generate new pixels that fit into an existing picture, producing changes that can appear quite convincing. In effect, the computer learns to fill in parts of an image much as a retoucher would, but with code.

The "IPA" part suggests an application package for Apple devices such as iPhones and iPads (IPA is the archive format used to distribute iOS and iPadOS apps), though similar tools can exist on other platforms too. It is simply a way of distributing the software. The core idea is the AI's ability to modify images in a very specific and controversial way. This kind of image manipulation raises serious questions about consent, digital rights, and the potential for misuse, which is why it needs to be discussed openly.

It's important to remember that the existence of such a tool does not endorse its use for anything harmful. Rather, it highlights the need for everyone to be aware of what AI can do and to think about the consequences of using these technologies. The conversation around 'undress AI mod IPA' is a loud signal that we need clear guidelines and a shared understanding of ethical boundaries in the digital space, and the wider discussion of AI ethics shows no sign of fading.

The Appeal and the Concerns

The appeal of AI tools that modify images, in general, comes from their ability to create something new or change existing content with relative ease. For some, it is about exploring creative ideas, such as generating unique digital art or developing design concepts. A graphic designer might use AI to quickly prototype different looks for a character, or an artist might explore abstract forms. Quickly producing many visual variations is compelling for a lot of creative people.

However, with specific tools like 'undress AI mod IPA,' the concerns quickly outweigh any potential positive uses. The main worry is misuse, especially the creation of non-consensual images of individuals. This can cause real harm to a person's privacy, reputation, and emotional well-being. It is a serious ethical problem that challenges our understanding of digital boundaries and personal space online.

There's also the broader issue of deepfakes and manipulated media, of which 'undress AI mod IPA' is a specific instance. These technologies make it harder for people to tell what is real and what is fake online, which can erode trust in digital information. If people can't trust what they see, that causes trouble for everything from news reporting to personal interactions. Concern about these issues is growing, and it needs to be addressed.

The conversation also touches on the responsibility of the creators of these AI models and the platforms that host them. Should there be more checks and balances to prevent harmful applications? How can we ensure that these powerful tools are used for good, or at least not for harm? These questions don't have easy answers, but they are important to keep asking as the technology moves forward. We are building powerful machines, and we need to make sure they are pointed in the right direction.

Ethical Implications of AI Image Generation

The ethical implications of AI image generation, especially with tools that can alter personal images, are profound. At the core is consent. Creating or distributing images of someone that have been digitally altered, particularly in sensitive ways, without their explicit permission is a serious violation of their personal autonomy and privacy. It is a form of digital harm that can have lasting real-world consequences for the person involved.

Then there's the issue of exploitation. Tools like 'undress AI mod IPA' can be used to create content that is harmful, abusive, or even illegal. This raises questions about how we protect vulnerable individuals online and how we prevent technology from being used to facilitate such actions. Addressing the challenge effectively requires a collective effort from technology developers, policymakers, and the general public, with the aim of building a safer digital environment for everyone.

Another big concern is the spread of misinformation and disinformation. When AI can create highly realistic fake images, it becomes much harder to distinguish truth from fabrication. Fake images can be used to spread false narratives, damage reputations, or influence public opinion in deceptive ways, so the potential for social disruption is significant. False information already spreads quickly on social media, and AI-generated content adds another layer to that problem.

The development and deployment of AI tools should always take these ethical concerns into account. Developers have a responsibility to build safeguards into their technology to prevent misuse. Users, too, have a responsibility to think critically about the content they consume and share. The aim is a culture of digital responsibility in which high-quality content also means ethical content.

Digital Privacy in the AI Era

Digital privacy has always been a big topic, but in the age of advanced AI it is becoming even more critical. When AI tools can take an existing image of a person and change it so significantly, it shows how vulnerable our digital likenesses can be. Photos we share casually online could be used in ways we never intended or consented to, which is an unsettling thought for many people.

The core of digital privacy is control over your personal information, and that includes your image. When AI can manipulate images without consent, it takes away that control. This is one of the most pressing issues with tools like 'undress AI mod IPA.' The problem is not just what the AI can do, but the lack of agency individuals have over their own representation once their image is out there. It is a fundamental breach of personal boundaries.

We need stronger protections and clearer legal frameworks to address these emerging challenges. Current laws are not always equipped to handle the rapid advances in AI technology. There is a growing call for regulations that specifically address AI-generated content, especially content that depicts individuals without their permission. As more and more AI tools are released, this is a crucial step toward upholding privacy rights in the digital realm.

Educating ourselves and others about these risks is also an important part of protecting digital privacy. Understanding how AI can be used, both for good and for harm, helps us make more informed choices about what we share online and how we interact with digital content. Being a smart digital citizen means reviewing our own digital habits as carefully as we would check a source of information.

Responsible AI Use and Digital Citizenship

Responsible AI use is something everyone needs to talk about, from the developers creating these tools to the people using them every day. It means, first and foremost, thinking about the ethical consequences of what we create and share online. For AI developers, it means building safeguards and ethical guidelines into their systems from the very beginning, so that their technology isn't easily misused. That is a major responsibility.

For users, responsible digital citizenship means being a critical consumer of information. If you come across an image or video that seems suspicious, or too good or too bad to be true, question its authenticity. Don't share things without a second thought. It also means respecting others' privacy and not participating in the creation or spread of non-consensual or harmful content. This is a basic rule for being online.

Supporting policies and initiatives that promote ethical AI development and digital literacy is also a big part of this. That could involve advocating for stronger privacy laws or backing organizations that educate the public about AI risks and benefits. It takes a collective effort to shape a digital future where technology serves humanity rather than causing harm. Acting now is easier than dealing with widespread digital harm later.

Learning from past mistakes is another key aspect. We have seen how quickly technology can get ahead of our ethical considerations. With AI, we have a chance to be more proactive, anticipating potential issues and addressing them before they become widespread problems. This means constantly reviewing and updating our understanding of what responsible AI use looks like as AI continues to evolve, so that society is able to handle these new challenges.

Positive Applications of AI in Content Creation

While we've been discussing some serious concerns, it's also important to remember that AI has many positive applications in content creation. Beyond the controversial uses, AI is changing how artists, designers, and creators work, offering possibilities that were unimaginable just a few years ago. It is an exciting time for digital creativity.

For example, AI can help with image restoration, making old, damaged photos look new again. It can assist with graphic design by quickly generating different layouts or color palettes, which saves a lot of time. In video production, AI can help with editing, adding special effects, or generating realistic background scenes. These tools act as powerful assistants for creative professionals.

AI is also being used to create entirely new forms of art. Generative models, such as generative adversarial networks (GANs), can produce novel images, music, and even written pieces. This opens up a new avenue for artistic expression, allowing creators to explore concepts and styles that might be too complex or time-consuming to achieve manually. It is a fascinating intersection of technology and creativity.

Furthermore, AI can make content creation more accessible. Tools that automate complex tasks or provide intelligent suggestions can help people without extensive technical skills produce high-quality digital content. This means more voices and perspectives can be shared online, enriching our collective digital experience. These are the kinds of AI use worth encouraging, because they benefit everyone.

The Future Outlook for AI and Digital Content

The future of AI and digital content will be dynamic and full of new developments. AI capabilities keep advancing, and these advances will continue to reshape how we create, consume, and interact with digital media. Keeping up will require ongoing attention and adaptation from all of us.

One trend we can expect is more sophisticated AI tools that generate and manipulate content with greater realism and speed. The line between real and AI-generated content will likely become even blurrier. This calls for continued innovation in detection technologies, so that manipulated media can be identified more effectively. The result is a constant back-and-forth between creation and verification.

There will also be an increasing focus on ethical AI development. As the public becomes more aware of the potential harms, there will be greater pressure on companies and researchers to build AI responsibly, with privacy and consent at the forefront. This could lead to new industry standards, certifications, and possibly more government regulation. The quality of an AI system should be judged by its ethical standing as well as its technical performance.

Education will play a crucial role in preparing us for this future. Teaching digital literacy, critical thinking, and ethical considerations around AI from a young age will be very important. It is about empowering individuals to navigate the complex digital landscape safely and responsibly. Continuous learning about AI is essential for everyone: we can appreciate how far AI has advanced while still addressing the problems that come with it.

Frequently Asked Questions

What are the main risks associated with 'undress AI mod IPA'?

The main risks revolve around privacy violations and the creation of non-consensual intimate imagery. Such tools can be used to digitally alter images of individuals without their permission, leading to severe emotional distress and reputational damage for the victims, and to possible legal consequences for those who create or share the images. They also contribute to the spread of deepfakes and misinformation, making it harder to trust what we see online. The harm these tools can cause is significant.

How can I protect myself from unwanted AI image manipulation?

Protecting yourself involves being mindful of what you share online. Limiting the visibility of your personal photos on social media, using strong privacy settings, and being cautious about sharing images with unknown parties can all help. It is also a good idea to stay informed about the capabilities of AI tools and the risks associated with them. If you see something suspicious, check its source. You can learn more about AI ethics and digital rights from reputable organizations.

Are there any positive uses for AI that can edit images?

Absolutely, yes! AI has many beneficial applications in image editing that are widely used. For instance, AI helps with photo enhancement, such as improving colors or sharpness, and it can automatically remove backgrounds or unwanted objects from pictures. It is also used in professional fields for medical imaging analysis, architectural visualization, and creating realistic virtual environments for games or movies. These are just a few examples of how AI can be a powerful and helpful tool for creators and professionals alike.

Conclusion

We've talked quite a bit about 'undress AI mod IPA' and what it represents in the larger conversation about AI and digital content. While AI offers amazing possibilities for creativity and innovation, it also raises serious ethical questions, particularly around privacy and consent. The discussions about these tools are important for shaping our digital future responsibly. That means understanding the capabilities, recognizing the potential harms, and working together to ensure technology serves us positively.

As AI continues to evolve, it's up to all of us (developers, users, and policymakers) to approach these advancements with care and foresight. We need to champion ethical development, promote digital literacy, and advocate for strong protections for individuals online. By focusing on responsible use and open dialogue, we can navigate the complexities of AI-generated content and build a more secure and respectful digital world for everyone.

Detail Author:

- Name : Mya Kuhic
