Artificial intelligence is producing a steady stream of new tools, including AI that can alter pictures. Some of that discussion involves apps that claim to "undress" images. These apps raise important questions about privacy, ethics, and how technology should be used.

People who search for "undress ai download app" may be curious about how these tools work, or worried about the harm they could cause. Either way, these applications touch on sensitive areas. This article explains what is really going on with them so you can be better informed.

This article looks at the technology involved, the serious ethical concerns, and what all of this means for your safety online. It covers a very specific kind of AI tool and the bigger picture around it. Knowing more about it can help everyone make better choices and stay safer on the internet, which is almost a necessity these days.

Table of Contents

- What Are These Apps Claiming to Do?

- How the Technology Works (Generally Speaking)

- Ethical and Societal Concerns

- Legal Landscape and Risks

- Protecting Yourself Online

- Frequently Asked Questions About Undress AI Apps

- Staying Informed and Acting Responsibly

What Are These Apps Claiming to Do?

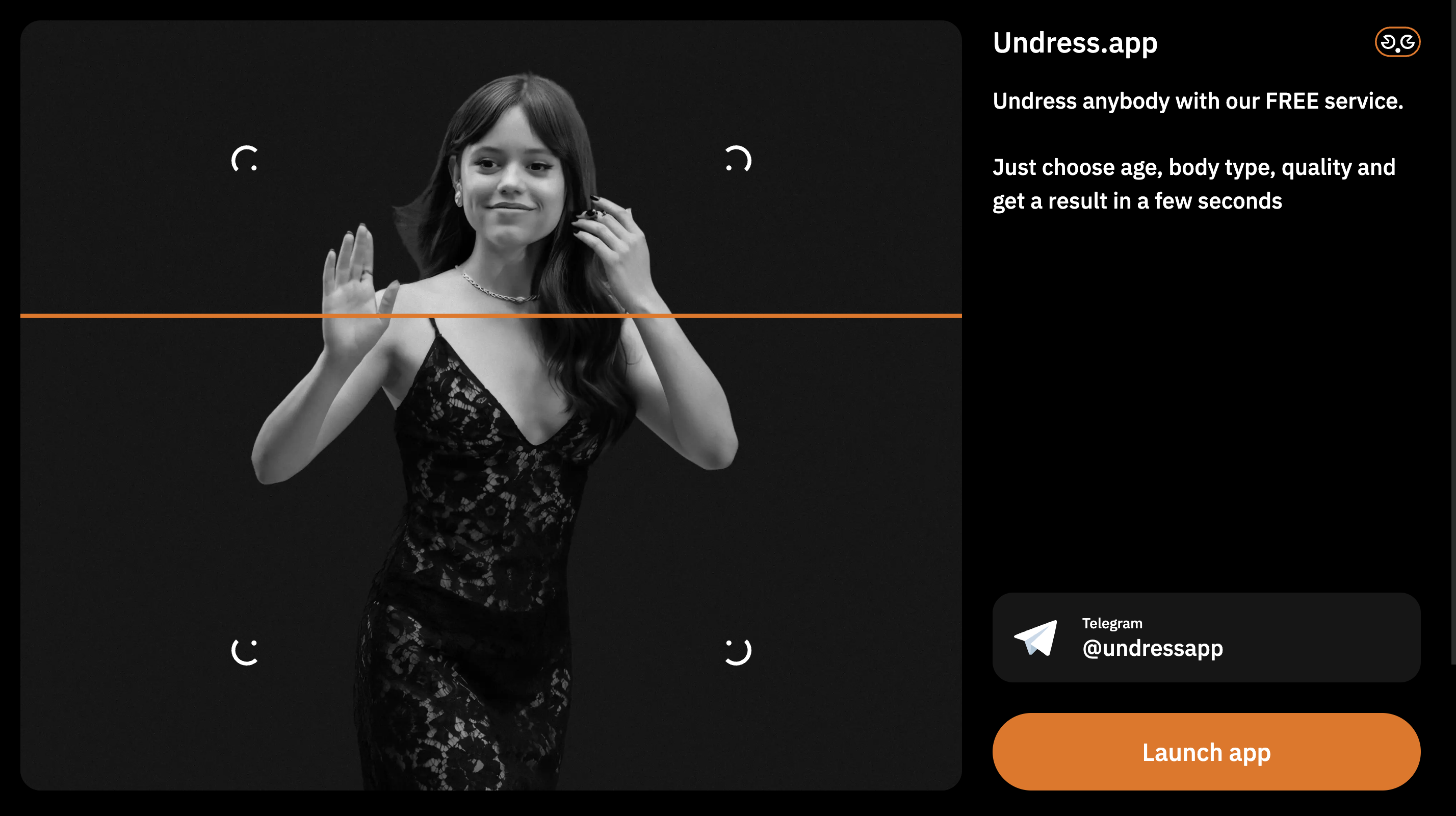

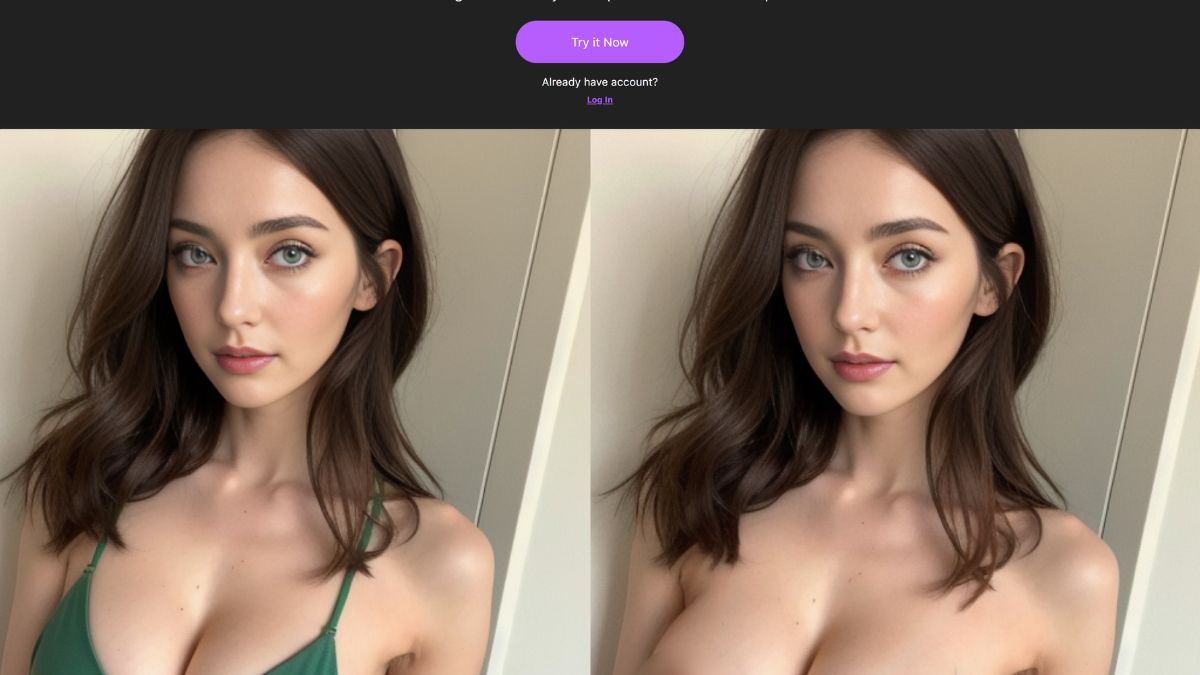

When people talk about an "undress ai download app," they usually mean software that uses artificial intelligence to alter images of people. The claim is that these apps can remove clothes from a picture, making it look as if the person is not wearing anything. This is done by computer programs that have been trained on large numbers of images. The effect is similar to the work of a digital artist, except that it is automated.

These applications are often promoted as tools for "fun" or "experimentation." In practice, they create realistic but completely fake images. The results are not real photographs. They are computer-generated fakes, and that distinction is important for everyone to grasp.

The apps may promise quick and easy ways to transform pictures, but it is important to look beyond the surface and consider the wider implications. What does it mean when anyone can create these kinds of images? That is a question we should all consider.

How the Technology Works (Generally Speaking)

The core of an "undress ai download app" is a generative AI model. A commonly cited example is the generative adversarial network, or GAN, although newer image tools may use other approaches such as diffusion models. These systems can create new data, like images, that look very real, and the technology keeps improving.

In a GAN, two parts of the AI work against each other. One part, the generator, tries to make a fake image. The other part, the discriminator, tries to tell whether an image is fake or real. They keep training until the fake images are good enough that the discriminator can no longer reliably tell the difference. This process allows for the creation of very convincing visual content.

The same technology is used for many good things, like restoring old photos or creating realistic characters in video games. The serious problems start when it is used to create images that violate someone's privacy. It is the same tool, used for a very different purpose.

AI and Image Creation

AI models learn from huge amounts of data. For image creation, this can mean millions of pictures. The models learn patterns, shapes, and textures, which helps them capture how things look in the real world. They can then generate new images that fit those learned patterns, much like a student learning from many examples.

When you give an AI an image, it uses its training to predict what certain parts of the image would look like if they were different. For example, a model trained on many faces can make a face look older or younger. This ability to predict and fill in details is key to how these apps operate.

The quality of the output depends heavily on the quality and amount of training data. More diverse, higher-quality training data usually leads to more realistic and detailed results. This is why some AI-generated images look almost like photographs.

The "Undressing" Process

For an "undress ai download app," the AI has likely been trained on datasets that include images of people with and without clothes. This allows the AI to learn how to "replace" clothing with skin or other body parts. It is not actually removing anything. It is generating new pixels in place of the old ones, so the result is a digital illusion.

The process usually involves identifying the person in the image and then applying a transformation. The AI tries to make the new parts look natural by matching skin tone, shadows, and body shape, and by blending them with the rest of the picture as seamlessly as possible.

However, the results are often imperfect. There can be strange distortions, unnatural textures, or parts that do not look quite right. Even with imperfections, the very idea of creating such images raises serious questions.

Ethical and Societal Concerns

The existence of "undress ai download app" tools raises serious ethical concerns. These are not minor issues. They touch on fundamental rights and societal well-being, so it is important to talk about them openly and to understand the impact these apps can have on real people.

The main problem is the potential for harm to individuals. When images are altered without consent, the result can be emotional distress, reputational damage, and even safety risks. This kind of technology can be misused in very harmful ways.

Beyond individual harm, there are broader societal worries. These apps can contribute to a culture in which digital images are not trusted and people's bodies are objectified without their permission. This has long-term consequences for how we interact online and how we view each other.

Privacy Violations

One of the biggest concerns is the violation of privacy. When someone's image is altered using an "undress ai download app" without their knowledge or permission, their image is being used in a way they never agreed to. This is a clear breach of personal boundaries.

People have a right to control how their image is used, and these apps take that control away. The altered images can then be shared widely, causing immense distress to the person depicted. It is a very intrusive act, and it can have lasting effects on someone's life.

This type of image manipulation can make people feel unsafe online. They may worry that any picture they share could be misused. That erodes trust in digital platforms and makes people less willing to share aspects of their lives, which is a problem for the whole online community.

Consent Issues

Consent is central when it comes to images of people. With "undress ai download app" tools, consent is almost always missing: the person in the picture did not agree to have their image altered in this way. This makes the creation and sharing of such images deeply unethical and a fundamental breach of respect for another person.

Without consent, these images are a form of digital abuse. They can be used to harass, intimidate, or shame individuals, and the harm is particularly severe when they are used to target specific groups or individuals. This is a serious ethical lapse and needs to be recognized as such.

Because the images are made without the person's knowledge, victims often have limited recourse once the images are out there. The damage is frequently done before they even know about it. This highlights the need for strong legal protections and ethical guidelines for AI technology, which society needs to address urgently.

Misinformation and Harm

These apps can also be used to spread false information. An altered image, even an imperfect one, can be presented as real, leading to misunderstandings or malicious rumors. This can cause significant harm to a person's reputation and relationships.

The harm is not just about privacy. There is also a psychological impact. Discovering that a fake, intimate image of yourself exists and is being shared can be incredibly traumatic. It can lead to anxiety, depression, and a feeling of powerlessness. This is a very real and painful consequence for victims.

Furthermore, the existence of such tools normalizes the idea of non-consensual image manipulation. This can lead to broader acceptance of harmful digital practices and can change how people view privacy and respect online. We need to be very careful about what we normalize.

Legal Landscape and Risks

The legal situation around "undress ai download app" technology is still developing. Laws are trying to catch up with the pace of change in AI, and what is illegal in one place may be a gray area in another. This makes the rules complicated to understand.

However, many countries are starting to recognize the severe harm caused by non-consensual deepfakes and similar image manipulations. New laws are being proposed or put into place to address these issues, which is an important step toward protecting people.

Even where no law addresses "undress AI" directly, other laws, such as those covering harassment, defamation, or child exploitation, might apply. The legal risks for people who create or share such content can be very high, and the consequences can be serious.

Is It Legal?

The legality of an "undress ai download app" depends heavily on where you are and how the app is used. In many places, creating or sharing non-consensual intimate images, even fake ones, is illegal. This can fall under laws related to revenge porn, harassment, or the misuse of personal data. It is a line that should not be crossed.

Some jurisdictions have specific laws against deepfakes or synthetic media created without consent. These laws aim to protect individuals from digital harm. It is important to check the specific laws in your area. This is not just a moral issue but a legal one too, and misuse is likely to have legal repercussions.

Even if the act of *downloading* the app isn't explicitly illegal, the *use* of it to create or share harmful content often is. Ignorance of the law is generally not an excuse, so anyone thinking about using such tools should be aware that they are taking a serious legal risk.

Potential Consequences

The consequences for creating or sharing non-consensual "undress AI" images can be severe. They include criminal charges, which could lead to fines or even prison time. Victims can also pursue civil lawsuits for damages, such as emotional distress or reputational harm.

Beyond legal repercussions, there are social and professional consequences. Someone found to be involved in such activities could face public backlash, loss of employment, or damage to their personal relationships. The internet remembers things, and actions like these can follow a person for a long time.

Platforms that host these apps or images also risk legal action and reputational damage. Many platforms are working to remove such content and ban users who create or share it, both to protect their users and to protect their own standing.

Reporting and Support

If you or someone you know is a victim of non-consensual image manipulation, help is available. You can report the content to the platform where it is hosted. Most social media sites and image-sharing platforms have policies against such material and provide a way to report it.

You should also consider contacting law enforcement. Providing them with all the details, including screenshots and links, can help with investigations. There are also organizations and support groups that specialize in helping victims of online harassment and image abuse. They can offer emotional support and guidance, so reach out if you need help.
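For readers comfortable with a little scripting, the following is a minimal sketch of one way to keep screenshots and links organized before handing them to a platform or to law enforcement. It is not an official or required procedure. The function names (`build_evidence_entry`, `build_evidence_log`) and the record fields are hypothetical choices made for illustration. The sketch records a SHA-256 fingerprint of each saved screenshot, the link where the content was seen, and the time the entry was logged.

```python
import hashlib
import json
from datetime import datetime, timezone


def build_evidence_entry(file_bytes: bytes, source_url: str, note: str = "") -> dict:
    """Describe one saved screenshot (hypothetical record layout)."""
    return {
        # Fingerprint of the file, useful for showing it was not changed later.
        "sha256": hashlib.sha256(file_bytes).hexdigest(),
        "size_bytes": len(file_bytes),
        # Link where the content was seen, as copied from the browser.
        "source_url": source_url,
        # Time this entry was logged (UTC), not the time the content was posted.
        "recorded_at_utc": datetime.now(timezone.utc).isoformat(),
        "note": note,
    }


def build_evidence_log(items: list[tuple[bytes, str, str]]) -> str:
    """Turn (file_bytes, source_url, note) tuples into a JSON report."""
    entries = [build_evidence_entry(data, url, note) for data, url, note in items]
    return json.dumps(entries, indent=2)
```

In practice, the bytes would come from a saved file, for example `pathlib.Path("screenshot.png").read_bytes()` (a placeholder filename), and the resulting JSON text can be saved alongside the screenshots. Keep the original files unmodified, since the fingerprint only matches the exact bytes that were logged.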

Remember, you are not alone, and it is not your fault. Taking action can help remove the harmful content and prevent further spread. It is a brave step to take, and it can make a big difference.

Protecting Yourself Online

In a world where AI can alter images, protecting yourself online becomes even more important. It is about being smart with what you share and how you interact with digital content. Being proactive can help you avoid becoming a target, and it is a good habit to develop for your online life.

It is not just about what you post, but also about how you view what you see. Developing a critical eye for images and videos is key. Not everything you see online is real, and that awareness is a strong shield against digital deception.

Educating yourself and others about the risks of AI image manipulation is also a powerful step. The more people understand these technologies, the better equipped we all are to combat their misuse. Keeping everyone safe is a community effort.

Digital Literacy

Being digitally literate means understanding how technology works and how it can be used, both for good and for bad. It involves recognizing fake content, understanding privacy settings, and knowing what to do if you encounter harmful material. This knowledge is an important tool in today's digital world, and everyone should try to build as much of it as they can.

Teach yourself to question images and videos that seem too perfect or too shocking. Look for signs of manipulation, like strange lighting, blurry edges, or unnatural movements. Sometimes a quick search can help verify whether something is real. This is a good practice for anything you see online.

Staying informed about new technologies, like "undress ai download app" tools, helps you understand the evolving risks. Follow reputable tech news sources and cybersecurity experts to keep up with the latest threats and protective measures. It is a continuous learning process for everyone online.

Secure Practices

Review your privacy settings on social media and other online platforms. Limit who can see your photos and personal information. The less accessible your images are, the less likely they are to be used for malicious purposes. This is a simple step, but it can make a big difference.

Be careful about which photos you share publicly. Think twice before posting pictures that could be misused or altered. Even seemingly innocent photos can be targets for AI manipulation, so a little caution about what you put out there is a good idea.

Finally, support efforts to create stronger laws and ethical guidelines for AI. Your voice matters in shaping a safer digital future. By working together, we can help ensure that technology serves humanity rather than harming it.

Frequently Asked Questions About Undress AI Apps

People often have questions about these kinds of apps, and that is understandable. The answers below address some of the most common ones.

Is "undress AI" legal?

Using an "undress ai download app" to create or share fake intimate images of someone without their consent is illegal in many parts of the world, and laws punishing this kind of misuse are getting stronger. It is a serious legal matter that carries real risks.

How do these "undress AI" apps work?

These apps use generative AI, such as generative adversarial networks (GANs), to create new image data. They do not actually "remove" clothes. They generate new pixels to make it look as if someone is undressed. The result is a digital fabrication, not a real photograph.

What are the risks of using or encountering "undress AI" images?

The risks are significant. For victims, these images can lead to severe emotional distress, damage to reputation, and even legal issues. For those who create or share such images, there can be serious legal consequences, including criminal charges.

Staying Informed and Acting Responsibly

The rise of technologies like the "undress ai download app" shows how quickly things can change in the digital world. It highlights the need for everyone to be more aware and responsible online. Knowing about these tools and their potential for harm is the first step toward protecting yourself and others.

It is not just about avoiding these apps, but also about promoting a culture of respect and consent in all digital interactions. We all have a part to play in making the internet a safer place. That means speaking up against misuse and supporting victims. It is a responsibility we all share.

By understanding the technology, the ethical issues, and the legal implications, you can make better choices. You can also help educate those around you. This kind of shared knowledge is powerful, and it helps build a more secure and ethical online environment for everyone. We need to keep this conversation going.

Detail Author:

- Name : Frida Reynolds IV
